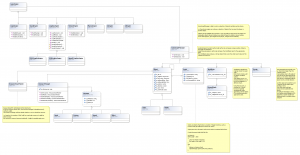

An outline of a simple zoomable heightmap generation algorithm:

- create a 256×256 noise map

- upon zooming in, create a new one as soon as the zoom factor reaches (1.5x iteration level)

- sample the previous map at the corresponding coordinates so that it serves as the base for the new texture

- use global coordinates (relative to the sphere) to generate the new texture iteration

- `iteration++`

- when zooming further in towards (2x iteration level), blend the new texture over the old one to hide transition artifacts

- when zooming out again, blend it out between (2x iteration level) and (1.5x iteration level)

- discard the texture upon reaching (1.5x iteration level)
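A minimal Python sketch of the steps above, under assumptions the outline does not state: "iteration level" is read as a zoom scale of 2^iteration (each iteration halves the edge size, which is also what the ~25-texture estimate for Earth implies), blending is a linear ramp, and the per-iteration detail comes from a simple integer hash (`hash_noise`) rather than any particular noise function. `refine`, `child_weight`, `active_layers` and `hash_noise` are hypothetical names, and heightmaps are plain nested lists to keep the sketch dependency-free.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]

def hash_noise(gx: int, gy: int, iteration: int) -> float:
    """Deterministic pseudo-random value in [-1, 1) for a global texel coordinate.
    Hypothetical hash; any noise keyed on global coordinates would do."""
    h = (gx * 73856093) ^ (gy * 19349663) ^ (iteration * 83492791)
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return (h & 0xFFFF) / 32768.0 - 1.0

def refine(parent: Grid, parent_origin: Tuple[int, int], origin: Tuple[int, int],
           iteration: int, amplitude: float, size: int = 256) -> Grid:
    """Texture for `iteration`: bilinear sample of the parent plus fresh noise.

    Origins are global texel coordinates at each texture's own iteration; one
    parent texel is assumed to span two texels of the new iteration, and the
    parent is assumed to be size x size as well.
    """
    out: Grid = []
    for j in range(size):
        row = []
        for i in range(size):
            gx, gy = origin[0] + i, origin[1] + j  # global coords at this iteration
            # Same point in parent-local texel coords, clamped so x0 + 1 stays in range.
            u = min(max(gx / 2 - parent_origin[0], 0.0), size - 1.001)
            v = min(max(gy / 2 - parent_origin[1], 0.0), size - 1.001)
            x0, y0 = int(u), int(v)
            fx, fy = u - x0, v - y0
            base = ((1 - fx) * (1 - fy) * parent[y0][x0] + fx * (1 - fy) * parent[y0][x0 + 1]
                    + (1 - fx) * fy * parent[y0 + 1][x0] + fx * fy * parent[y0 + 1][x0 + 1])
            # Noise keyed on global coords: regenerating a region yields the same detail.
            row.append(base + amplitude * hash_noise(gx, gy, iteration))
        out.append(row)
    return out

def child_weight(zoom: float, iteration: int) -> Optional[float]:
    """Blend weight of texture iteration+1 over texture `iteration`.

    None below 1.5x the level scale (child not created yet, or discarded);
    otherwise a linear ramp from 0 at 1.5x to 1 at 2x. Scale assumed 2**iteration.
    """
    s = 2.0 ** iteration
    if zoom < 1.5 * s:
        return None
    return min(1.0, (zoom - 1.5 * s) / (0.5 * s))

def active_layers(zoom: float, max_iteration: int = 25) -> List[Tuple[int, float]]:
    """(iteration, blend weight) for every texture that should exist at `zoom`."""
    layers = [(0, 1.0)]
    for it in range(max_iteration):
        w = child_weight(zoom, it)
        if w is None:
            break
        layers.append((it + 1, w))
    return layers

print(active_layers(1.75))  # [(0, 1.0), (1, 0.5)]
```

Because the weight depends only on the current zoom, zooming back out runs the same ramp in reverse, and a child texture is dropped as soon as the zoom falls below 1.5x its parent's scale.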

With this approach, graphics memory only ever holds 256×256 heightmaps, albeit n of them.

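For example, if the target planet has a circumference of 40,000 km (Earth), which is the edge size of the first texture, and the finest zoom level has an edge size of 1 m, each iteration halving the edge size takes log2(40,000,000 m / 1 m) ≈ 25.3 steps, so we would end up with approx. 25 textures.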
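The estimate can be checked with a few lines of Python. This assumes each iteration halves the edge size and, for the memory figure, 4 bytes per texel (a 32-bit float height); both are assumptions, and `halvings` and `memory_mib` are hypothetical helper names.

```python
import math

def halvings(circumference_m: float, finest_edge_m: float) -> float:
    """Number of edge-size halvings from the full circumference down to the finest edge."""
    return math.log2(circumference_m / finest_edge_m)

def memory_mib(n_textures: int, size: int = 256, bytes_per_texel: int = 4) -> float:
    """Graphics memory for n size x size heightmaps, assuming 32-bit float texels."""
    return n_textures * size * size * bytes_per_texel / 2**20

print(round(halvings(40_000_000, 1.0), 2))  # 25.25
print(memory_mib(25))                       # 6.25
```

Under these assumptions the whole stack of 25 heightmaps needs about 6.25 MiB, independent of planet size except through the logarithmic level count.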

One option is to keep only the minimum number of textures in memory (which limits the view distance), discarding the rest and re-rendering them on demand.
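One way to sketch this policy, assuming "minimum number" means a fixed window of the `keep` finest iterations around the current zoom: coarser iterations (the large textures that cover distant terrain) are discarded and rebuilt by a caller-supplied `build` callback when they are needed again. `LevelCache`, `build` and `keep` are hypothetical names.

```python
from typing import Callable, Dict, List

class LevelCache:
    """Keeps at most `keep` of the finest iterations resident (hypothetical policy)."""

    def __init__(self, build: Callable[[int], object], keep: int):
        self.build = build  # renders the texture for one iteration on demand
        self.keep = keep
        self.resident: Dict[int, object] = {}

    def update(self, finest: int) -> List[int]:
        """Make iterations finest-keep+1 .. finest resident; return them sorted."""
        wanted = range(max(0, finest - self.keep + 1), finest + 1)
        for it in list(self.resident):
            if it not in wanted:
                del self.resident[it]  # discard textures outside the kept window
        for it in wanted:
            if it not in self.resident:
                self.resident[it] = self.build(it)  # re-render on demand
        return sorted(self.resident)
```

For example, with `keep=3`, zooming to iteration 6 keeps iterations 4 to 6; zooming back out to iteration 2 rebuilds 0 to 2. If `build` relies on the parent texture (as the zoom steps do), it would have to rebuild missing parents itself.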

The same algorithm can be applied to movement parallel to the ground: if the camera moves farther than (0.25x edge width of the current iteration), a new texture at the same iteration level but with new base coordinates is computed and blended over the old one between (0.25x edge width) and (0.33x edge width).
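A sketch of the pan rule, assuming distance is the Euclidean distance in the plane of the current texture, measured from `anchor`, the position around which the current texture was generated. `recenter_weight` and `anchor` are hypothetical names, and 0.33 is used literally as in the text rather than 1/3.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float]

def recenter_weight(camera: Vec, anchor: Vec, edge: float) -> Optional[float]:
    """Blend weight of a re-centred texture over the current one.

    None while the camera is within 0.25 * edge of `anchor` (no new texture);
    otherwise a linear ramp from 0 at 0.25 * edge to 1 at 0.33 * edge.
    """
    d = math.hypot(camera[0] - anchor[0], camera[1] - anchor[1])
    if d <= 0.25 * edge:
        return None
    return min(1.0, (d - 0.25 * edge) / ((0.33 - 0.25) * edge))
```

Once the weight reaches 1, the new texture can replace the old one, and its own base position becomes the `anchor` for the next move.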